Introduction

For Day 4, I worked on feature engineering: creating new features that help models perform better.

I also compared different model families: Logistic Regression, Decision Tree, and Random Forest.

Why It Matters

Feature engineering is one of the most important skills in ML.

The quality of your features often matters more than the choice of algorithm.

Approach

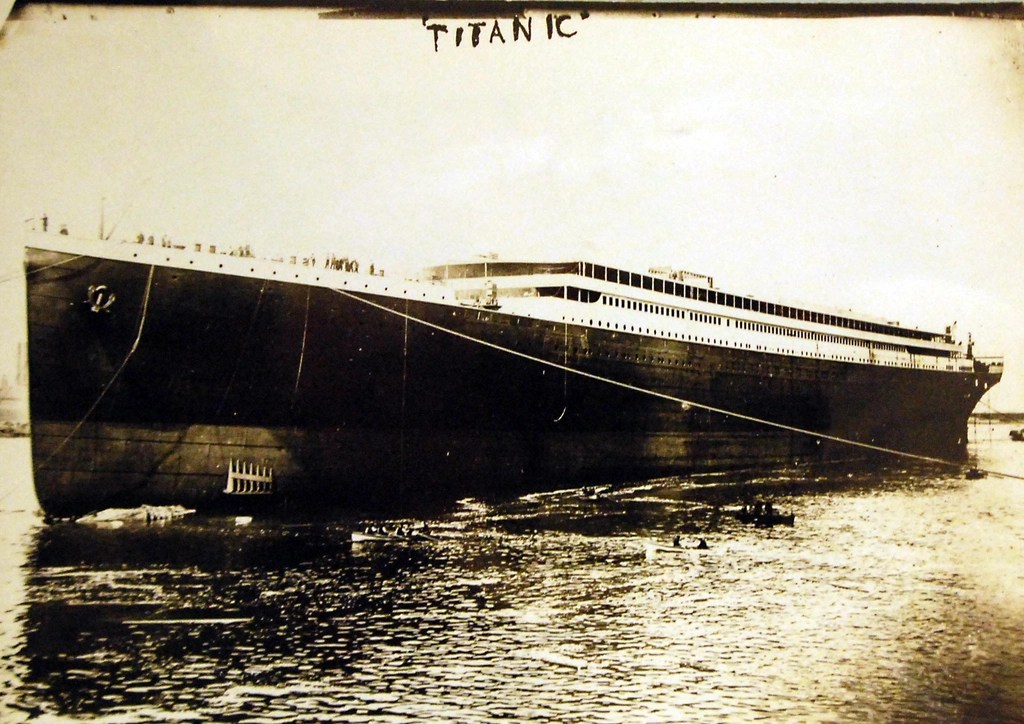

- Dataset: Titanic

- New features: family_size, is_child, fare_per_person

- Models: Logistic Regression, Decision Tree, Random Forest

- Validation: Stratified 5-fold CV

- Evaluation: Accuracy, F1, ROC-AUC

- Visualization: ROC overlay of all models
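The three engineered features can be sketched in pandas as below. This is a minimal sketch, not the exact code used for this day: it assumes the Kaggle-style Titanic column names (SibSp, Parch, Age, Fare). The is_child cutoff of 18 and the choice to divide Fare by family_size are illustrative assumptions, and the helper add_features is hypothetical.

```python
import numpy as np
import pandas as pd


def add_features(df: pd.DataFrame, child_age: float = 18) -> pd.DataFrame:
    """Return a copy of df with three engineered Titanic features.

    Assumes Kaggle-style columns SibSp, Parch, Age, Fare.
    The child_age cutoff of 18 is an illustrative assumption.
    """
    out = df.copy()
    # Passenger + siblings/spouses + parents/children aboard.
    out["family_size"] = out["SibSp"] + out["Parch"] + 1
    # 1 if age is known and below the cutoff; missing ages compare False -> 0.
    out["is_child"] = (out["Age"] < child_age).astype(int)
    # Assumption: the ticket fare is split evenly across the family group.
    out["fare_per_person"] = out["Fare"] / out["family_size"]
    return out


# Three hand-made rows standing in for the real Titanic data.
passengers = pd.DataFrame({
    "SibSp": [1, 0, 3],
    "Parch": [0, 0, 2],
    "Age": [22.0, np.nan, 4.0],
    "Fare": [7.25, 8.05, 21.075],
})
features = add_features(passengers)
print(features[["family_size", "is_child", "fare_per_person"]])
```

Note that a missing Age silently becomes is_child = 0 here; imputing Age first (for example, by passenger title) is a common alternative.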

Results

Random Forest outperformed the simpler models, and the engineered features gave all models a boost. The ROC overlay made the performance gap clear.
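A side-by-side comparison like this, with stratified 5-fold CV, the three metrics, and a ROC overlay, might look roughly like the sketch below in scikit-learn. It is an assumption-laden sketch rather than the original notebook: make_classification stands in for the engineered Titanic feature matrix, and the model hyperparameters (max_depth=4, n_estimators=200, the scaler in front of Logistic Regression) are illustrative choices. Because the data is synthetic, it does not reproduce the results above.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen; drop this line in a notebook
import matplotlib.pyplot as plt
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score, roc_curve
from sklearn.model_selection import StratifiedKFold, cross_val_predict, cross_validate
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the engineered Titanic features (assumption).
X, y = make_classification(n_samples=891, n_features=8, weights=[0.62], random_state=0)

# Hyperparameters below are illustrative, not tuned.
models = {
    "Logistic Regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "Decision Tree": DecisionTreeClassifier(max_depth=4, random_state=0),
    "Random Forest": RandomForestClassifier(n_estimators=200, random_state=0),
}

# One shared stratified 5-fold split, so every model sees identical folds.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
metrics = ("accuracy", "f1", "roc_auc")

results = {}
fig, ax = plt.subplots(figsize=(6, 5))
for name, model in models.items():
    # Mean of each metric across the five test folds.
    scores = cross_validate(model, X, y, cv=cv, scoring=list(metrics))
    results[name] = {m: scores[f"test_{m}"].mean() for m in metrics}
    # Out-of-fold probabilities: each sample is scored by a model that never saw it.
    proba = cross_val_predict(model, X, y, cv=cv, method="predict_proba")[:, 1]
    fpr, tpr, _ = roc_curve(y, proba)
    ax.plot(fpr, tpr, label=f"{name} (AUC = {roc_auc_score(y, proba):.3f})")
    print(f"{name:20s} " + "  ".join(f"{m}={v:.3f}" for m, v in results[name].items()))

# Diagonal reference line for a random classifier.
ax.plot([0, 1], [0, 1], linestyle="--", color="grey", label="Chance")
ax.set_xlabel("False positive rate")
ax.set_ylabel("True positive rate")
ax.set_title("ROC overlay (out-of-fold predictions)")
ax.legend(loc="lower right")
fig.savefig("roc_overlay.png", dpi=150)
```

The AUC in the legend is computed on pooled out-of-fold probabilities, so it can differ slightly from the mean per-fold roc_auc printed above it. To run this on the real data, swap X and y for the engineered Titanic columns and the Survived target, imputing missing values first (LogisticRegression, for one, rejects NaN).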

Takeaways

- Small, thoughtful features can have a big impact.

- Tree-based models are flexible and benefit from engineered features.

- Comparing models side by side highlights trade-offs.

Artifacts

Video walkthrough
